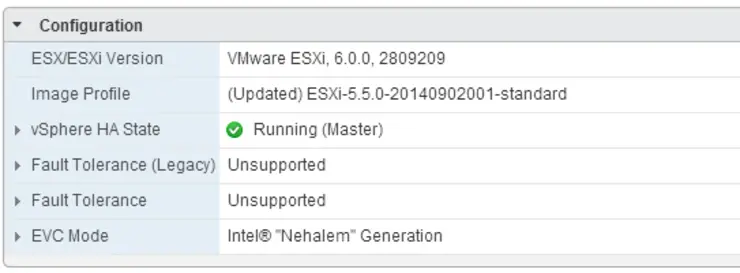

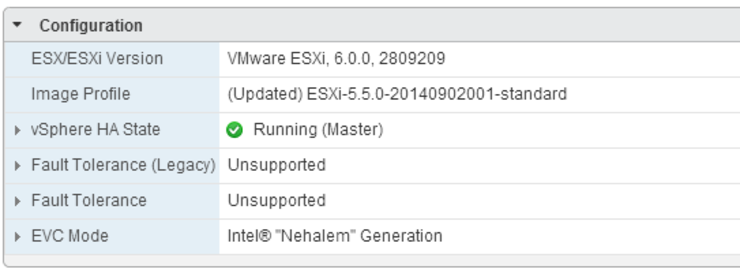

I recently rebuilt my lab and added two new ESXi hosts. In the process I reused my old single host, which I upgraded from ESXi 5.5 to 6.0 and patched to the same level as the new hosts.

Everything was working as expected until it came time to enable HA.

My old host claimed the master role, so the other boxes had to connect to it as slaves. However, these failed with “HA Agent Unreachable” and “Operation Timed Out” errors.

After some host reboots, plus ping, nslookup and other standard connectivity tests, I was still making no progress, so I started blaming the ESXi 5.5 -> 6.0 upgrade. As it turns out, this was unfounded.

Looking at /var/log/fdm.log on the master host, I could see the following lines:

SSL Async Handshake Timeout : Read timeout after approximately 25000ms. Closing stream <SSL(<io_obj p:0x1f33f794, h:31, <TCP 'ip:8182'>, <TCP 'ip:47416'>>)>

Further along, we could see that the master knows the other hosts are alive:

[ClusterDatastore::UpdateSlaveHeartbeats] (NFS) host-50 @ host-50 is ALIVE

And further along again:

[AcceptorImpl::FinishSSLAccept] Error N7Vmacore16TimeoutExceptionE(Operation timed out) creating ssl stream or doing handshake

On the slave candidates, this could be seen:

[ClusterManagerImpl::AddBadIP] IP {master.ip.address.here} marked bad for reason Unreachable IP
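If you want to check other hosts for the same pattern, the log lines above can be searched for mechanically. Below is a minimal Python sketch, assuming you have a copy of fdm.log to read. The signature names and the `scan_fdm_log` / `network_path_suspect` helpers are hypothetical, and the regexes only match the exact messages quoted in this post, so other FDM builds may word things differently.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical signature names; each regex matches one of the fdm.log
# messages quoted in this post.
SIGNATURES = {
    "ssl_handshake_timeout": re.compile(r"SSL Async Handshake Timeout"),
    "ssl_accept_timeout": re.compile(r"FinishSSLAccept\].*Operation timed out"),
    "bad_ip": re.compile(r"AddBadIP\].*marked bad for reason Unreachable IP"),
    "heartbeat_alive": re.compile(r"UpdateSlaveHeartbeats\].*is ALIVE"),
}


def scan_fdm_log(lines: Iterable[str]) -> Counter:
    """Count how many lines match each symptom signature."""
    counts: Counter = Counter()
    for line in lines:
        for name, pattern in SIGNATURES.items():
            if pattern.search(line):
                counts[name] += 1
    return counts


def network_path_suspect(counts: Counter) -> bool:
    """Heuristic: datastore heartbeats say the slaves are up, yet SSL
    handshakes with the FDM agent time out. That points at the path between
    the agents rather than at hosts being down (it does not prove MTU)."""
    handshake_failures = counts["ssl_handshake_timeout"] + counts["ssl_accept_timeout"]
    return counts["heartbeat_alive"] > 0 and handshake_failures > 0


# Demo on excerpts from the logs above.
sample = [
    "SSL Async Handshake Timeout : Read timeout after approximately 25000ms.",
    "[ClusterDatastore::UpdateSlaveHeartbeats] (NFS) host-50 @ host-50 is ALIVE",
    "[AcceptorImpl::FinishSSLAccept] Error N7Vmacore16TimeoutExceptionE"
    "(Operation timed out) creating ssl stream or doing handshake",
]
counts = scan_fdm_log(sample)
print(dict(counts))
print("network path suspect:", network_path_suspect(counts))
```

To run it against a real log, something like `with open("/var/log/fdm.log", errors="replace") as f: print(scan_fdm_log(f))` should work. Treat the heuristic as a hint only: it flags the combination seen here, not a jumbo frame problem specifically.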

After yet more troubleshooting and messing about with SSL certificate regeneration, I stumbled upon this:

> This issue occurs when Jumbo Frames is enabled on the host Management Network (VMkernel port used for host management) and a network misconfiguration prevent hosts communicating using jumbo frames. It is supported to use jumbo frames on the Management Network as long as the MTU values and physical network are set correctly.
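One common way to test what the quote describes, that the physical network really carries jumbo frames end to end, is `vmkping` with the don't-fragment flag (`-d`) and a payload size (`-s`) chosen so the packet exactly fills the MTU. For IPv4 that is the MTU minus the 20-byte IP header and the 8-byte ICMP header, so 8972 bytes for a 9000 MTU. The Python sketch below only does that arithmetic and builds the command line; the helper names, the hop MTUs, and the 192.0.2.10 target (a documentation address) are placeholders. A default ping sends small packets, so it can succeed even while full-size frames are being dropped.

```python
from typing import List, Sequence

IPV4_HEADER = 20  # bytes, IPv4 header without options
ICMP_HEADER = 8   # bytes, ICMP echo header


def max_icmp_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def path_mtu(link_mtus: Sequence[int]) -> int:
    """The effective MTU of a path is the smallest MTU of any hop on it."""
    return min(link_mtus)


def vmkping_args(vmk: str, target: str, mtu: int) -> List[str]:
    """argv for a don't-fragment vmkping sized to fill `mtu` exactly."""
    return ["vmkping", "-I", vmk, "-d", "-s", str(max_icmp_payload(mtu)), target]


# Hypothetical path: vmk0 at 9000, but one physical switch port left at 1500.
hops = [9000, 1500, 9000]
print(path_mtu(hops))  # 1500
print(max_icmp_payload(9000), max_icmp_payload(1500))  # 8972 1472
print(" ".join(vmkping_args("vmk0", "192.0.2.10", 9000)))
```

If the 8972-byte vmkping fails while a 1472-byte one succeeds, something on the path is not passing jumbo frames.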

I checked the vmk0 MTU on my master host and, sure enough, I had configured it as 9000 back in the day and completely forgotten about it. I bumped it back down to 1500 and the HA agents came up right away.
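One way to make the same change from the ESXi shell: `esxcli network ip interface list` shows each VMkernel interface's MTU, and `esxcli network ip interface set --interface-name=vmk0 --mtu=1500` changes it. As a minimal sketch, assuming you have already read the MTUs off the host, the Python below flags any vmknic whose MTU is larger than the network path supports and prints the matching set command. The helper names and the MTU values are hypothetical.

```python
from typing import Dict, List


def oversized_vmknics(vmk_mtus: Dict[str, int], path_mtu: int) -> List[str]:
    """VMkernel interfaces whose MTU exceeds what the network path supports."""
    return sorted(name for name, mtu in vmk_mtus.items() if mtu > path_mtu)


def esxcli_set_mtu(vmk: str, mtu: int) -> List[str]:
    """argv for `esxcli network ip interface set` to change one vmknic's MTU."""
    return ["esxcli", "network", "ip", "interface", "set",
            f"--interface-name={vmk}", f"--mtu={mtu}"]


# Hypothetical values, as you might read them from
# `esxcli network ip interface list` on the master host.
master = {"vmk0": 9000, "vmk1": 1500}

for vmk in oversized_vmknics(master, path_mtu=1500):
    print(" ".join(esxcli_set_mtu(vmk, 1500)))
```

The sketch only prints the commands rather than running them, so you can review them before touching a management interface.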

Hopefully this saves you some time and you don’t have to go through what I did trying to solve this.

Why not follow @mylesagray on Twitter for more like this!